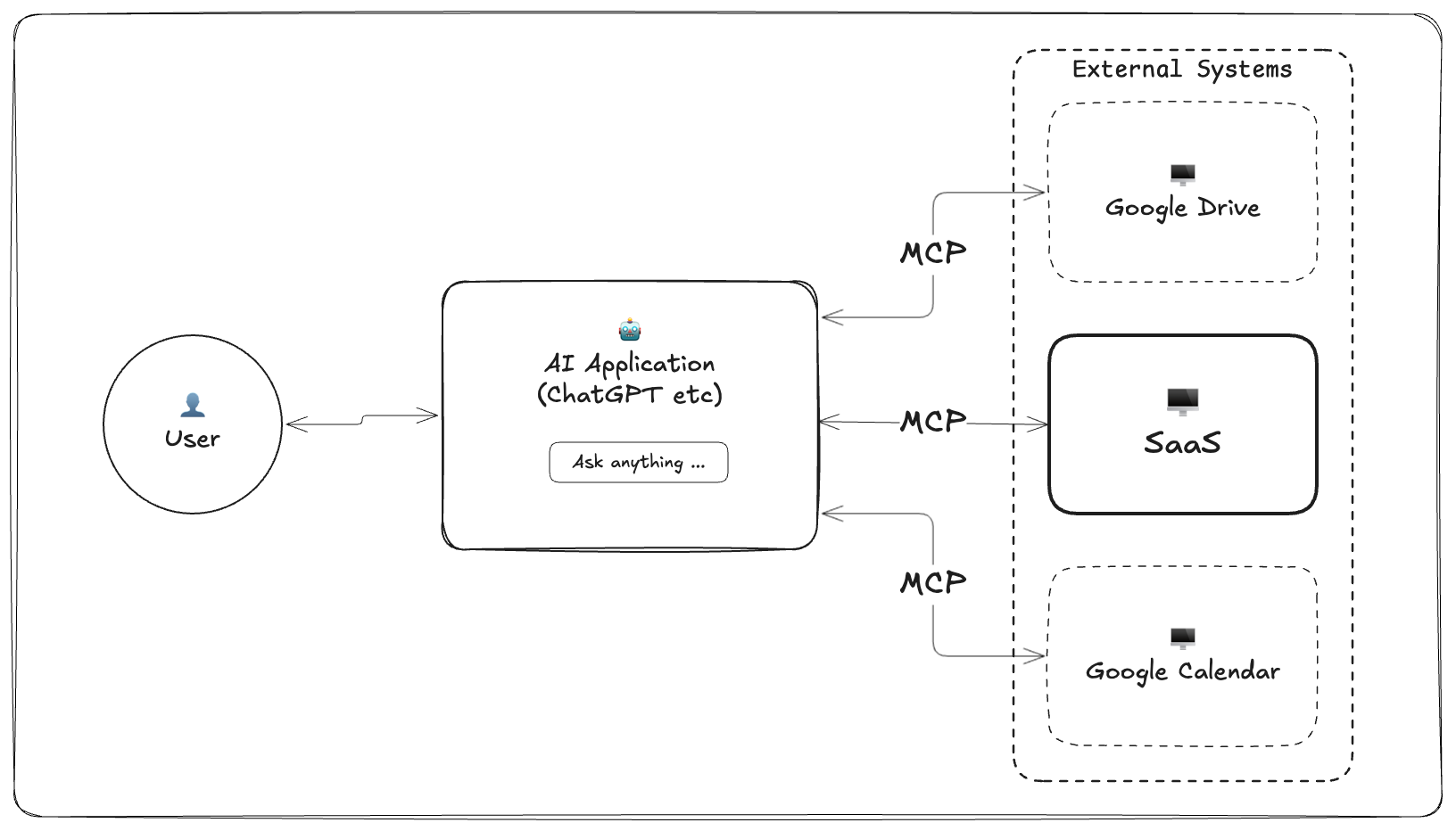

MCP stands for Model Context Protocol and it’s simply a standard for connecting external systems to AI apps.

API as in Application Programming Interface? … No.

| | API | MCP |
|---|---|---|
| Audience | 👨‍💻 Developers | 🤖 AI Apps |
| Purpose | 🖥️ ↔️ 🖥️ Connects applications to applications | 🖥️ ↔️ 🤖 Connects applications to AI |
| Interaction | 🔄 Request-response | 💬 Multi-turn, conversational |

MCP servers are a great way to make your existing product AI-ready fast.

Being AI-ready opens up new growth opportunities as user behaviour shifts from click-driven workflows to conversation-driven assistance.

| Product Metrics | Business Metrics | How |
|---|---|---|
| Activation ⬆️ | ARR ⬆️ | Just ask AI = faster time-to-value. |
| Engagement ⬆️ | NRR ⬆️ | AI keeps users exploring. Low barrier to entry. |
| Retention ⬆️ | LTV ⬆️ | Consistent utility keeps users coming back. |

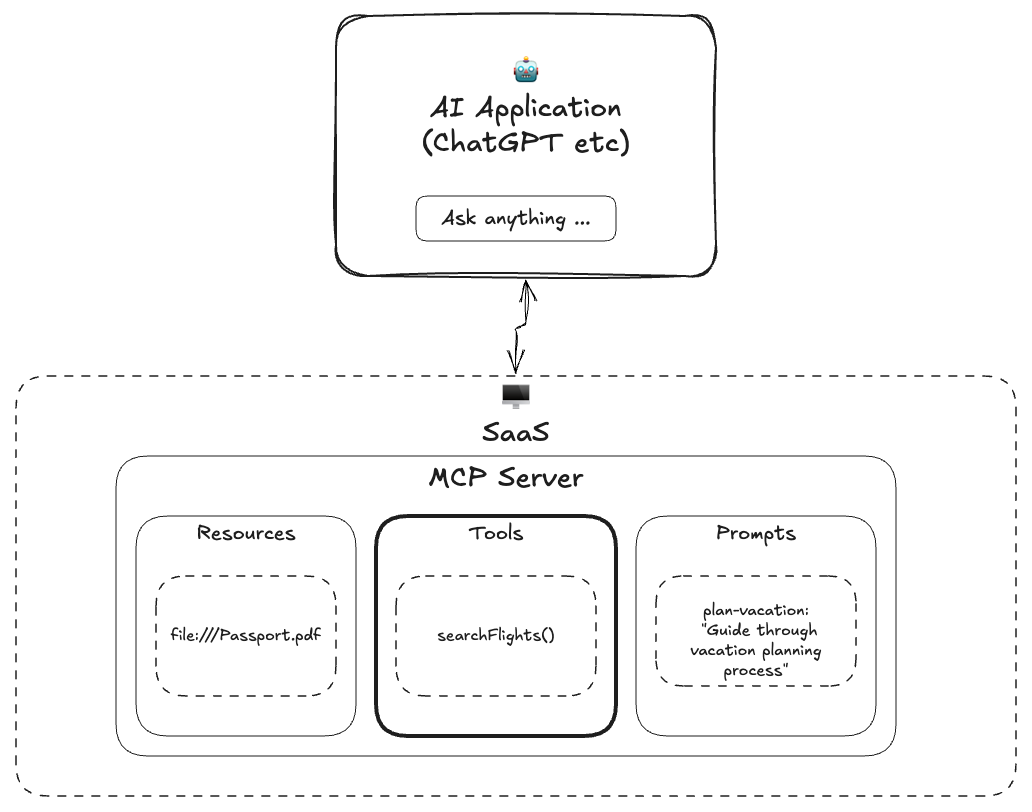

MCP servers are made up of 3 blocks. The 80/20 of MCP servers is just tools. You can layer on the Resources/Prompts later as needed.

| Block | Mode | Role |
|---|---|---|
| 🔧 Tools | Active | Trigger actions |
| 📦 Resources | Passive | Provide knowledge |
| 💡 Prompts | Directive | Guide usage |

If you’re interested in the technical details, Cloudflare have a great guide on how to build and deploy your first remote MCP server.

You can always use the Inconvo API within tool calls if you want to build, deploy and manage your own MCP server.

Well-known AI applications with MCP clients:

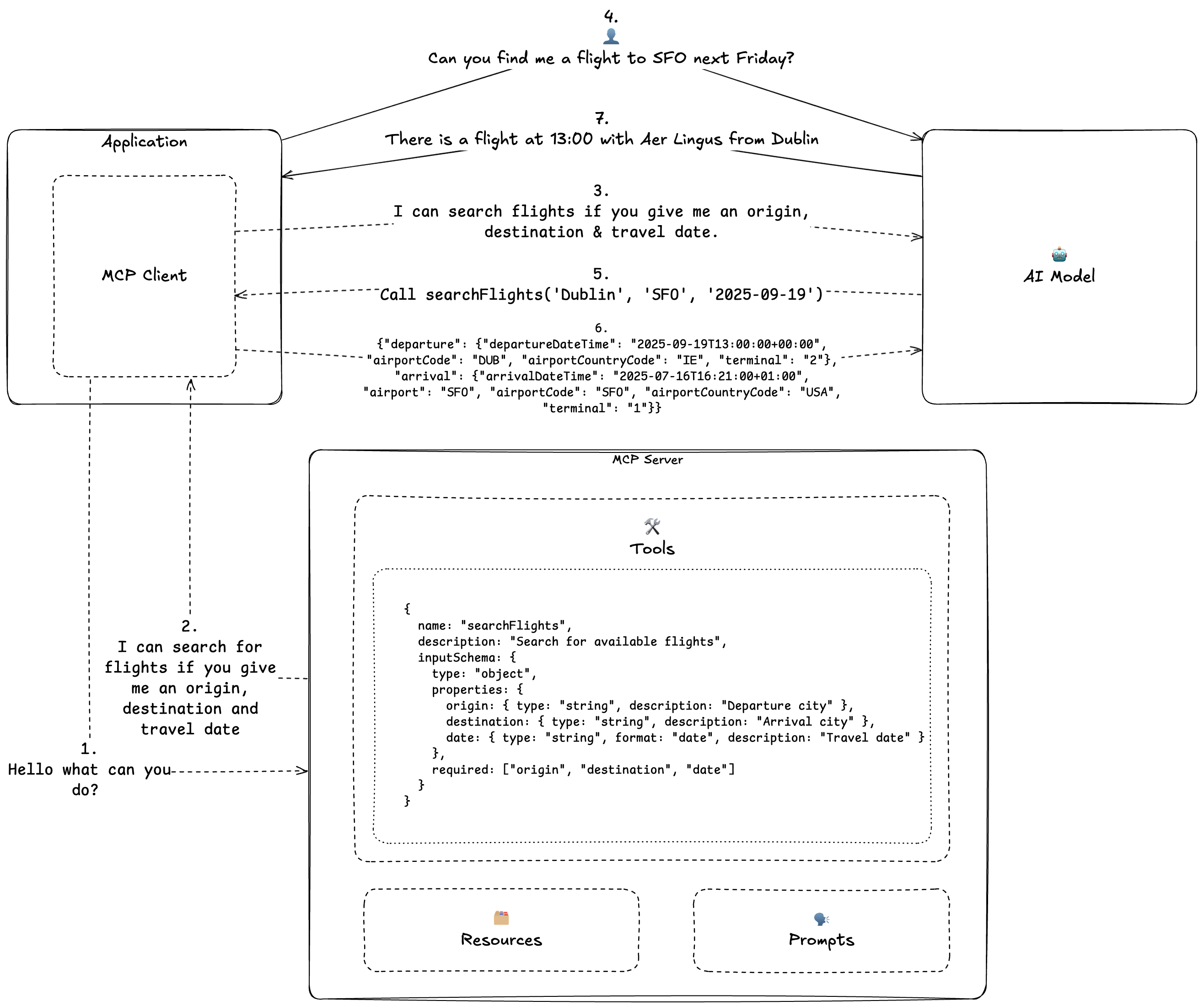

Tools are model-controlled.

AI applications tell the AI model about the tools available in connected MCP servers. Then, when the user sends a message to the AI model, the model has the ability to choose to use a tool if it sees fit to help answer the message—hence “model controlled.”
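To make "model-controlled" concrete, here is a minimal sketch of that loop. Everything in it is hypothetical: the `count-pets` tool, the `fake_model` stand-in for the real model's decision, and the helper names are assumptions for illustration, not part of any MCP SDK.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Tool:
    name: str
    description: str  # what the model reads when deciding whether to call the tool
    handler: Callable[[dict], str]


# Hypothetical tool exposed by a connected MCP server.
TOOLS = {
    "count-pets": Tool(
        "count-pets",
        "Count the pets currently in the store.",
        lambda arguments: "12 pets",
    ),
}


def describe_tools() -> list:
    """What the AI app tells the model about the available tools."""
    return [{"name": t.name, "description": t.description} for t in TOOLS.values()]


def fake_model(message: str, tools: list) -> Optional[dict]:
    """Stand-in for the model: it alone decides whether a tool is needed."""
    if "how many" in message.lower():
        return {"name": "count-pets", "arguments": {}}
    return None


def handle_message(message: str) -> str:
    call = fake_model(message, describe_tools())
    if call is None:
        return "(model answers directly)"
    # The app only executes what the model asked for.
    return TOOLS[call["name"]].handler(call["arguments"])
```

The point of the sketch is who makes the decision: the app never picks a tool itself, it only describes the tools and runs whichever call the model returns.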

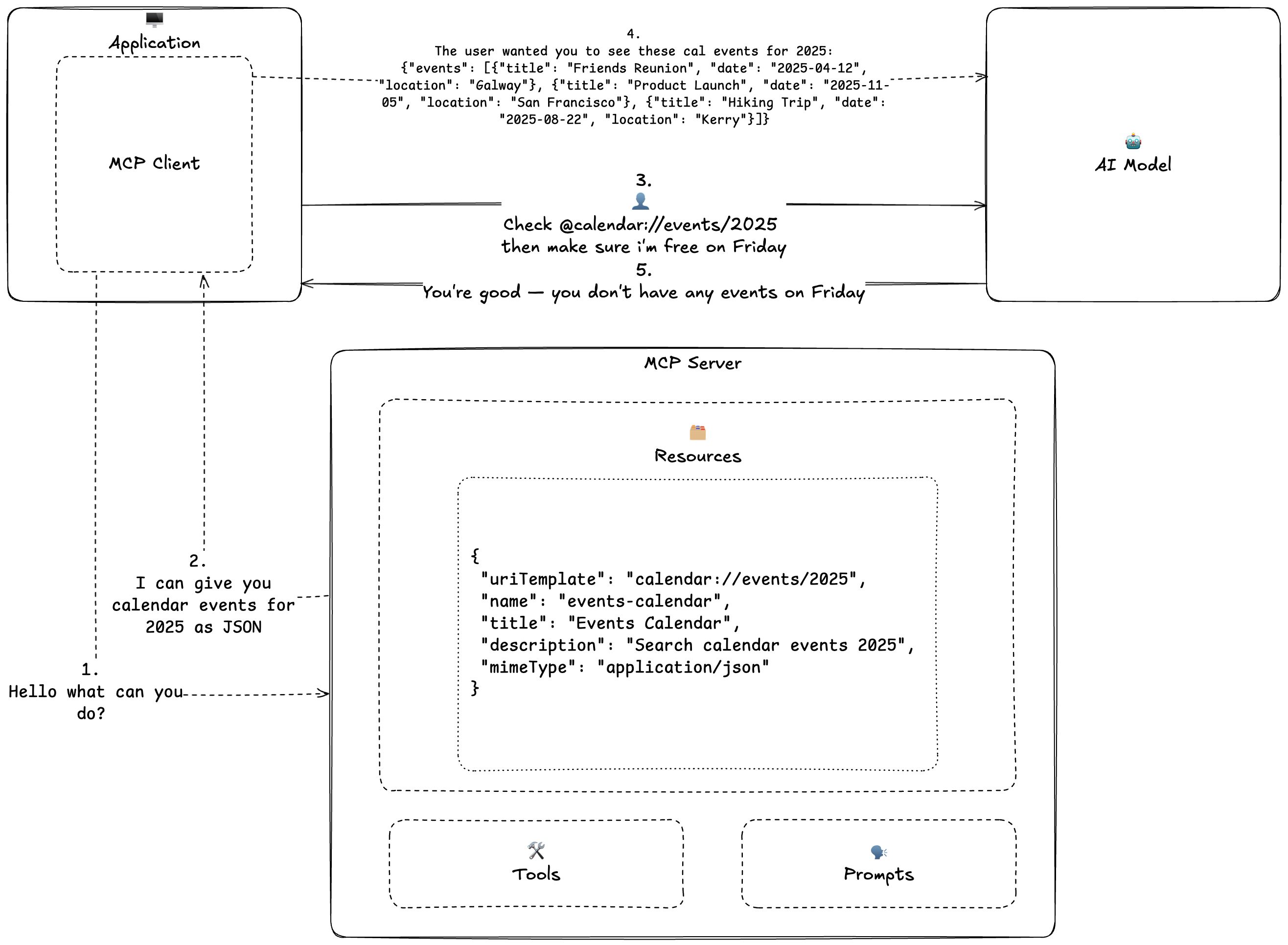

Resources are application-controlled.

AI applications provide methods for users to select resources via text commands (@resource or #resource) or attachment dropdowns. When a resource is selected by a user the app ensures that it is passed to the AI model as context—hence “application controlled”.
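As a rough sketch of the "application-controlled" part, the snippet below resolves `@resource` mentions in a user message and collects the matching content as context. The resource names, their contents, and the `build_context` helper are made up for illustration; real AI apps each have their own selection UI.

```python
import re

# Hypothetical resources exposed by an MCP server, keyed by name.
RESOURCES = {
    "pricing": "Plans: Free, Pro, Enterprise.",
    "changelog": "v2.1: added bulk export.",
}


def build_context(message: str) -> list:
    """Return the content of every known resource the user mentioned with @name."""
    # (?<!\w) skips things like email addresses, where @ follows a word character.
    mentioned = re.findall(r"(?<!\w)@([\w-]+)", message)
    return [RESOURCES[name] for name in mentioned if name in RESOURCES]
```

Here the app, not the model, decides what ends up in context: `build_context("Summarise @pricing for me")` returns the pricing text, which the app would then pass to the model alongside the message.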

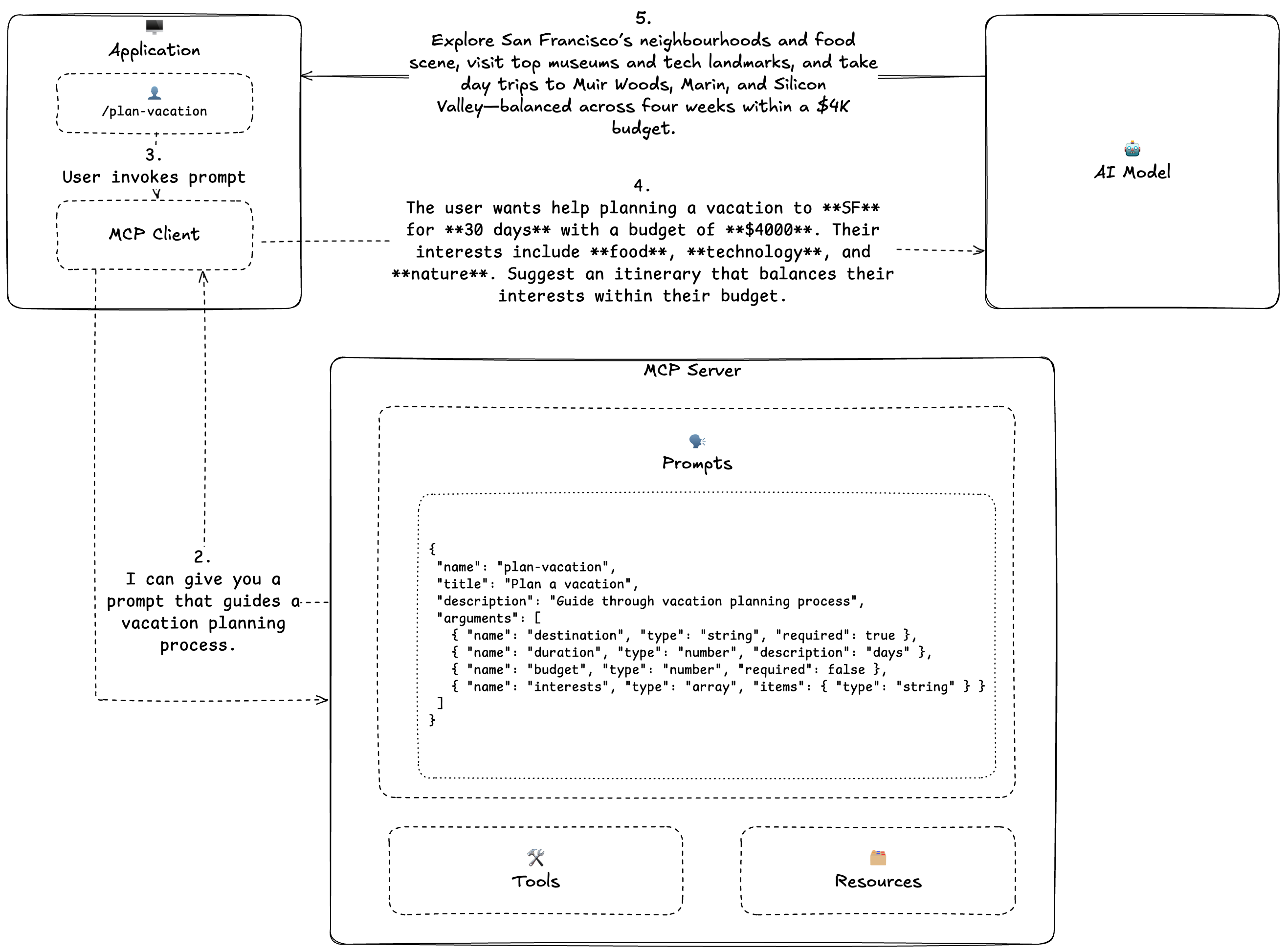

Prompts are user-controlled.

AI applications provide methods for users to invoke prompts, often via text commands (/prompt). The easiest way to think about them is as canned natural language instructions for the AI model.
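In code, a prompt can be as simple as a named template that gets expanded when the user invokes it. This is a sketch only; the `weekly-summary` prompt, its `account` argument, and `expand_prompt` are hypothetical names.

```python
# Hypothetical canned prompts, keyed by the name a user would type after "/".
PROMPTS = {
    "weekly-summary": "Summarise this week's activity for {account} in five bullet points.",
}


def expand_prompt(command: str, **arguments: str) -> str:
    """Turn a /command plus its arguments into the instruction sent to the model."""
    name = command.removeprefix("/")
    return PROMPTS[name].format(**arguments)
```

So `/weekly-summary` with `account="Acme"` expands to "Summarise this week's activity for Acme in five bullet points." before it reaches the model.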

An MCP server for SaaS should expose the actions and data of the application.

It’s easy to think about this from a SaaS UI perspective.

| Expose | SaaS UI equivalent |
|---|---|
| Actions | Forms & delete buttons |
| Data | Dashboards, reports & exports |

Create tools that call CRUD (Create, Read, Update, Delete) API endpoints. You can actually drop the Read for now but CRD isn’t a well-known acronym.

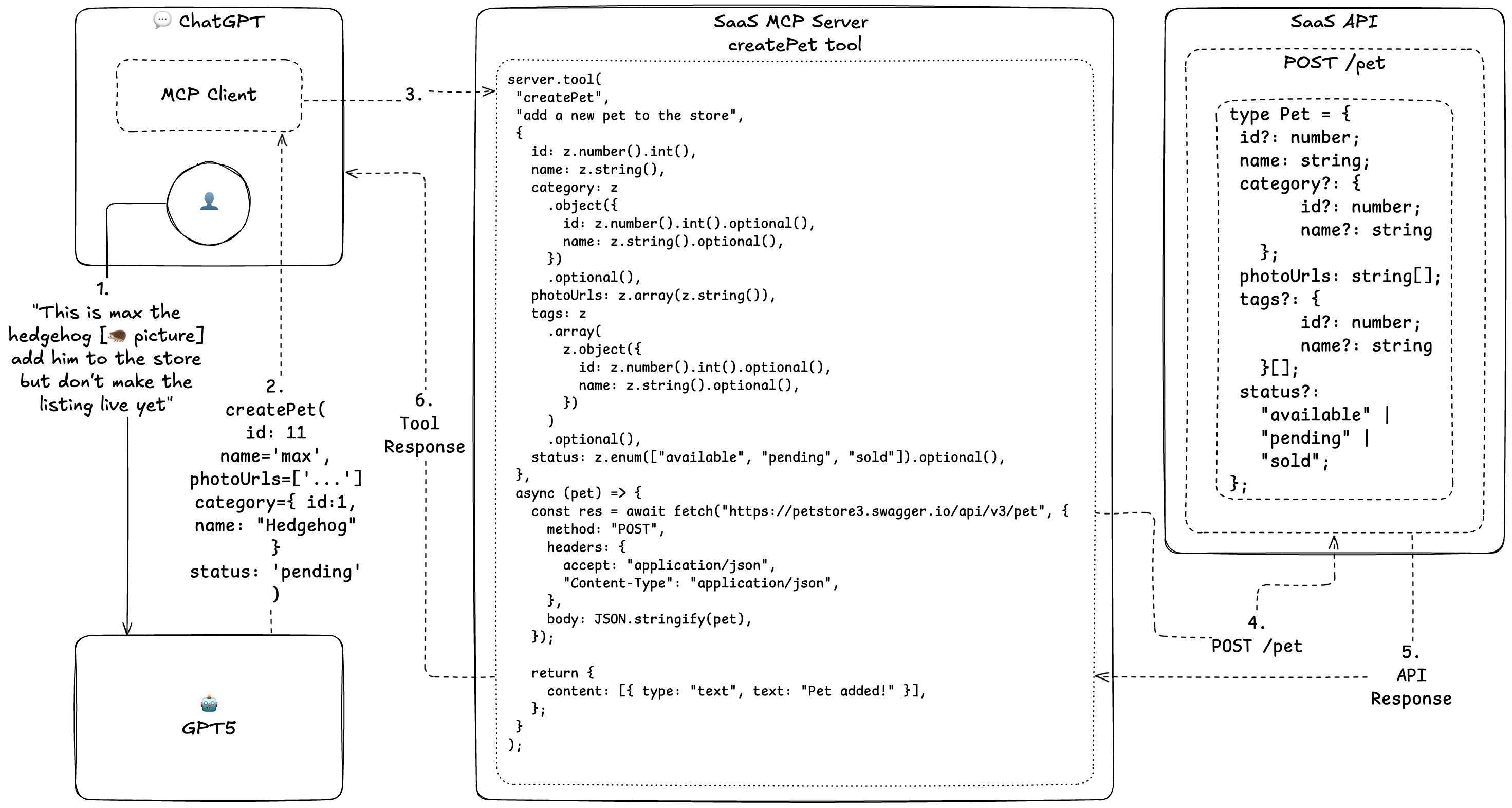

Let’s say we have a petstore SaaS API that can add a new pet to the store with `POST /pet`.

We create a tool `add-pet` with the description “Add a new pet to the store.”

The AI model can call the tool which calls our API to create the pet based on the message/command sent by a user to an AI application.
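Here is a minimal sketch of what that tool could look like, using only the Python standard library. The tool definition follows the usual MCP shape (a name, a description, and a JSON Schema for inputs), but the `name` field in the payload and the base URL are assumptions, and the handler only builds the request rather than sending it.

```python
import json
import urllib.request

# Tool definition the MCP server would advertise to clients.
ADD_PET_TOOL = {
    "name": "add-pet",
    "description": "Add a new pet to the store.",
    "inputSchema": {
        "type": "object",
        "properties": {"name": {"type": "string"}},  # assumed payload field
        "required": ["name"],
    },
}


def build_add_pet_request(base_url: str, arguments: dict) -> urllib.request.Request:
    """Translate the model's tool arguments into a POST /pet request."""
    body = json.dumps({"name": arguments["name"]}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/pet",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

A real handler would pass the result to `urllib.request.urlopen` (with authentication added) and return the API response to the model as the tool result.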

Exposing data is the trickier of the two.

There are two approaches:

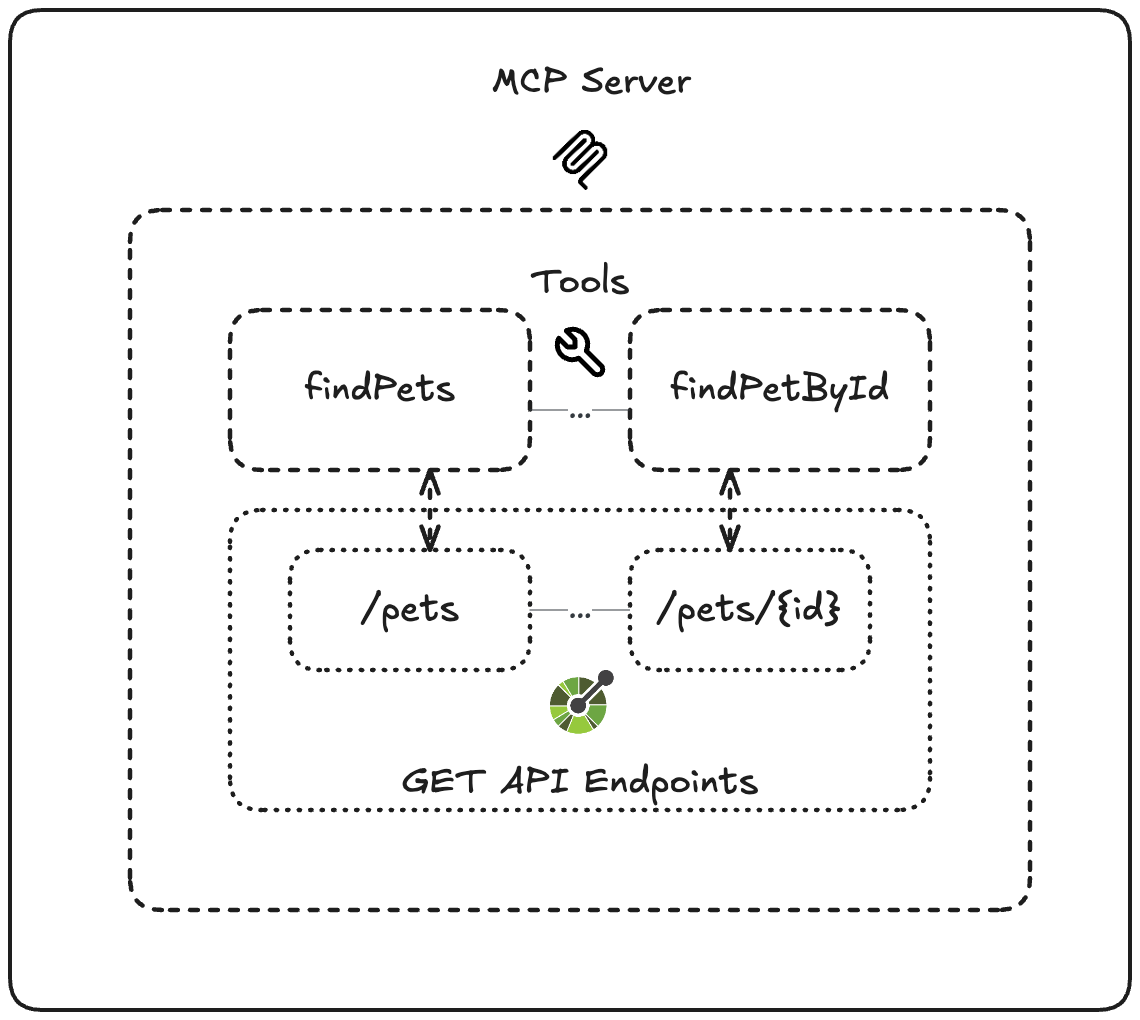

The first approach is to load all of your API endpoints into the MCP server as tools and let the client decide which combination of tools to use to fulfil user requests.

This works by effectively making your API directly callable by AI models through AI apps.

I think this is where a bunch of the confusion around “Isn’t an MCP just an API?” comes from.

Through the tool interface the model becomes aware of all endpoints and their input shapes/types.
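A sketch of what "loading endpoints as tools" amounts to: each endpoint description is mechanically turned into a tool definition with a name, a description, and an input schema. The endpoint list and the naming scheme below are made up for illustration; in practice you would likely generate this from your OpenAPI document.

```python
# Hypothetical endpoint descriptions, e.g. extracted from an OpenAPI document.
ENDPOINTS = [
    {"method": "GET", "path": "/pet/{petId}", "summary": "Find a pet by ID",
     "params": {"petId": "integer"}},
    {"method": "POST", "path": "/pet", "summary": "Add a new pet to the store",
     "params": {"name": "string"}},
]


def endpoint_to_tool(endpoint: dict) -> dict:
    """Wrap one API endpoint as a tool definition the model can read."""
    slug = endpoint["path"].strip("/").replace("/", "-").replace("{", "").replace("}", "")
    return {
        "name": f'{endpoint["method"].lower()}-{slug}',
        "description": endpoint["summary"],
        "inputSchema": {
            "type": "object",
            "properties": {k: {"type": v} for k, v in endpoint["params"].items()},
            "required": list(endpoint["params"]),
        },
    }


TOOLS = [endpoint_to_tool(e) for e in ENDPOINTS]
```

With this mapping the model sees one tool per endpoint (`get-pet-petId`, `post-pet`), which is exactly why the result can feel like "just an API" with a different wrapper.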

In order for the model to extract data from your system it must figure out which (or which combination of) endpoint(s) it needs to call to retrieve the requested data.

This approach works well if you already have a highly composable API designed for data analysis — concretely, the API expresses any combination of filters, sorts, and transformations in a structured way. Think GraphQL or OData.
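To illustrate what "composable" means here, the sketch below accepts filters, a sort, and a limit as structured arguments and applies them in any combination. It is a toy in-memory version with made-up rows and parameter names, not GraphQL or OData.

```python
# Hypothetical records standing in for your data store.
ROWS = [
    {"name": "Rex", "species": "dog", "price": 300},
    {"name": "Tom", "species": "cat", "price": 120},
    {"name": "Fido", "species": "dog", "price": 150},
]


def run_query(rows, filters=None, sort_by=None, descending=False, limit=None):
    """Apply any combination of equality filters, a sort, and a limit."""
    result = [r for r in rows if all(r[k] == v for k, v in (filters or {}).items())]
    if sort_by is not None:
        result = sorted(result, key=lambda r: r[sort_by], reverse=descending)
    return result[:limit]  # limit=None keeps every row
```

A single tool backed by an interface like this can answer "cheapest dogs", "most expensive pet", and many other questions without a dedicated endpoint for each one.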

However, most SaaS APIs are designed for record management, not data analysis or flexible retrieval.

An MCP server with tools wrapping an API which isn’t sufficiently composable will break down when presented with the wide array of potential user queries which come with a natural language input.

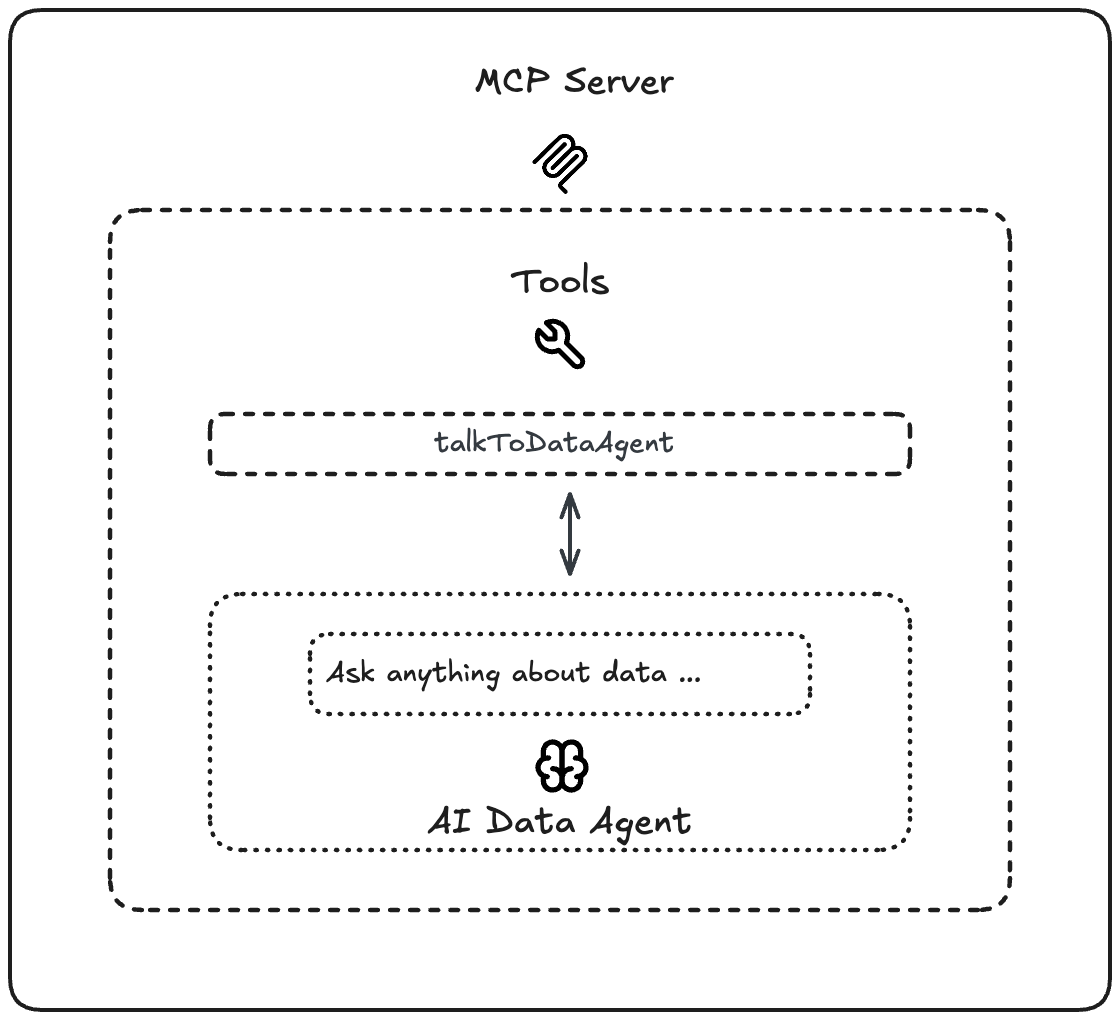

The second approach is to expose a single AI data agent as a tool. This way, when the AI model calls the AI data agent tool, it uses the most composable interface of all: natural language.

Then your AI data agent communicates with your database and dynamically generates queries based on the message passed to the tool.

Of course with great power comes great responsibility …

You will need to add deterministic guardrails to ensure no rogue SQL is generated via prompt injection or otherwise.
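One way to sketch a deterministic guardrail is to let the database itself refuse anything that is not a read of an allowlisted table. The example below uses SQLite's authorizer callback through Python's `sqlite3` module as a stand-in. The tables, the allowlist, and `run_generated_sql` are hypothetical, and other databases would typically use read-only roles, views, or a SQL parser instead.

```python
import sqlite3

ALLOWED_TABLES = {"pets"}  # hypothetical allowlist of readable tables


def read_only_authorizer(action, arg1, arg2, db_name, source):
    """Allow SELECT statements that read allowlisted tables; deny everything else."""
    if action == sqlite3.SQLITE_SELECT:
        return sqlite3.SQLITE_OK
    if action == sqlite3.SQLITE_READ and arg1 in ALLOWED_TABLES:
        return sqlite3.SQLITE_OK  # for SQLITE_READ, arg1 is the table name
    return sqlite3.SQLITE_DENY


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pets (name TEXT, price INTEGER)")
conn.execute("CREATE TABLE users (email TEXT)")
conn.executemany("INSERT INTO pets VALUES (?, ?)", [("Rex", 300), ("Tom", 120)])
conn.commit()
conn.set_authorizer(read_only_authorizer)  # the guardrail applies from here on


def run_generated_sql(sql: str) -> list:
    """Run model-generated SQL; raise PermissionError if the guardrail rejects it."""
    try:
        return conn.execute(sql).fetchall()
    except sqlite3.Error as exc:
        raise PermissionError(f"query rejected: {exc}") from exc
```

Because the check runs inside the database engine rather than in a prompt, it behaves the same way no matter what text the model was given. Note that this strict version also denies SQL functions such as `count()`, so a real allowlist would need to be a little wider.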

Also, because exposing data this way relies on natural language, you will need to support multi-turn conversations. That means managing conversation state and intelligently handling follow-up questions within your AI data agent.
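A bare-bones sketch of conversation state: keep the turns per conversation ID and hand the full history to the agent on every call, so a follow-up like "and last month?" can be resolved. The `ConversationStore` class and the placeholder answer are assumptions; a real agent would call an LLM where the placeholder is, and the store would live in a database rather than in memory.

```python
from collections import defaultdict


class ConversationStore:
    """In-memory history of turns, keyed by conversation ID."""

    def __init__(self):
        self._turns = defaultdict(list)

    def add_turn(self, conversation_id: str, role: str, text: str) -> None:
        self._turns[conversation_id].append({"role": role, "text": text})

    def history(self, conversation_id: str) -> list:
        return list(self._turns[conversation_id])


def ask_data_agent(store: ConversationStore, conversation_id: str, message: str) -> str:
    store.add_turn(conversation_id, "user", message)
    context = store.history(conversation_id)
    # Placeholder: a real agent would send `context` to an LLM here.
    answer = f"(answer using {len(context)} turn(s) of context)"
    store.add_turn(conversation_id, "agent", answer)
    return answer
```

The tool would then accept a conversation ID alongside the message, so the AI app can continue the same thread across calls.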

If you have a multi-tenant database, you’ll also need to ensure that data scoping logic runs on each query. This must be built with deterministic code rather than an LLM, which cannot be trusted to reliably scope data every time.
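One possible shape for this, sketched with SQLite as a stand-in database: the model only proposes a table and columns, while plain code validates them and always appends the tenant filter as a bound parameter. The schema, the allowlist, and the function names are hypothetical, and the tenant ID is assumed to come from the authenticated session, never from model output.

```python
import sqlite3

# Hypothetical allowlist: which columns may be selected from which table.
ALLOWED = {"pets": {"name", "price"}}


def build_scoped_query(table: str, columns: list, tenant_id: str):
    """Build a SELECT that is always filtered by tenant, whatever the model asked for."""
    if table not in ALLOWED or not set(columns) <= ALLOWED[table]:
        raise ValueError("table or column not allowed")
    sql = f"SELECT {', '.join(columns)} FROM {table} WHERE tenant_id = ?"
    return sql, (tenant_id,)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE pets (tenant_id TEXT, name TEXT, price INTEGER)")
conn.executemany(
    "INSERT INTO pets VALUES (?, ?, ?)",
    [("acme", "Rex", 300), ("globex", "Tom", 120)],
)


def fetch_for_tenant(table: str, columns: list, tenant_id: str) -> list:
    sql, params = build_scoped_query(table, columns, tenant_id)
    return conn.execute(sql, params).fetchall()
```

The design choice is that the scoping clause is never part of what the LLM generates, so there is nothing for a prompt injection to remove.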

In the real world, there is often a difference between how data is stored and how that data is spoken about. In those cases, you will need to prompt the AI data agent with information that helps it to understand how to map from the semantics of the data to its storage formats. This is essentially a “Semantic Layer/Model” of the data, which you will need to build in order to improve the AI data agent’s performance over time.
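In its simplest form, a semantic layer can start as a dictionary that maps the words people use to the expressions the database understands, rendered into the agent's prompt. The terms, table names, and column names below are invented for illustration.

```python
# Hypothetical mapping from business vocabulary to storage-level expressions.
SEMANTIC_MODEL = {
    "active customer": "customers.status = 'active'",
    "revenue": "SUM(orders.amount_cents) / 100.0",
}


def semantic_prompt(model: dict) -> str:
    """Render the semantic model as instructions for the AI data agent."""
    lines = [f'- "{term}" means: {expr}' for term, expr in model.items()]
    return "Use these definitions when writing queries:\n" + "\n".join(lines)
```

Each time the agent misreads a term, you add or refine an entry, which is how the layer improves performance over time.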

As always, you can’t manage what you can’t measure, so you’ll need to log agent traces (all steps, decisions, and outputs of an agent) in order to debug and continuously improve.
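A trace does not need to be fancy to be useful. The sketch below records each step with a timestamp and serialises the trace as JSON lines; the `Trace` class, the step kinds, and the field names are assumptions rather than a standard format.

```python
import json
import time


class Trace:
    """Collects every step an agent takes while answering one request."""

    def __init__(self, conversation_id: str):
        self.conversation_id = conversation_id
        self.steps = []

    def log(self, kind: str, detail: str) -> None:
        self.steps.append({"ts": time.time(), "kind": kind, "detail": detail})

    def to_jsonl(self) -> str:
        """One JSON object per step, ready to append to a log file."""
        return "\n".join(
            json.dumps({"conversation_id": self.conversation_id, **step})
            for step in self.steps
        )
```

For example, logging the user question, the generated query, and the final answer as three steps gives you enough to replay what the agent did when something goes wrong.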

Shameless plug: if you want all the power of this approach with none of the lift, Inconvo solves this.